Practical Quantum Computing: Why Advanced Quantum Control Holds the Key

As the field of quantum computing continues to advance, two parallel efforts have emerged: the race toward quantum practicality and the race toward fault-tolerant quantum computing. The first focuses on demonstrating the value of quantum computing in the near term on noisy intermediate-scale quantum (NISQ) processors, while the second aims to implement quantum error correction to realize the full potential of quantum computing.

Both of these efforts require integrating classical processing into the quantum computing stack in order to make the most progress. In this blog post, we will explore the importance of such classical-quantum integration and why it is vital for achieving practical quantum computers. Let’s begin by diving into the quantum computing stack and its main layers.

The Quantum Computing Stack

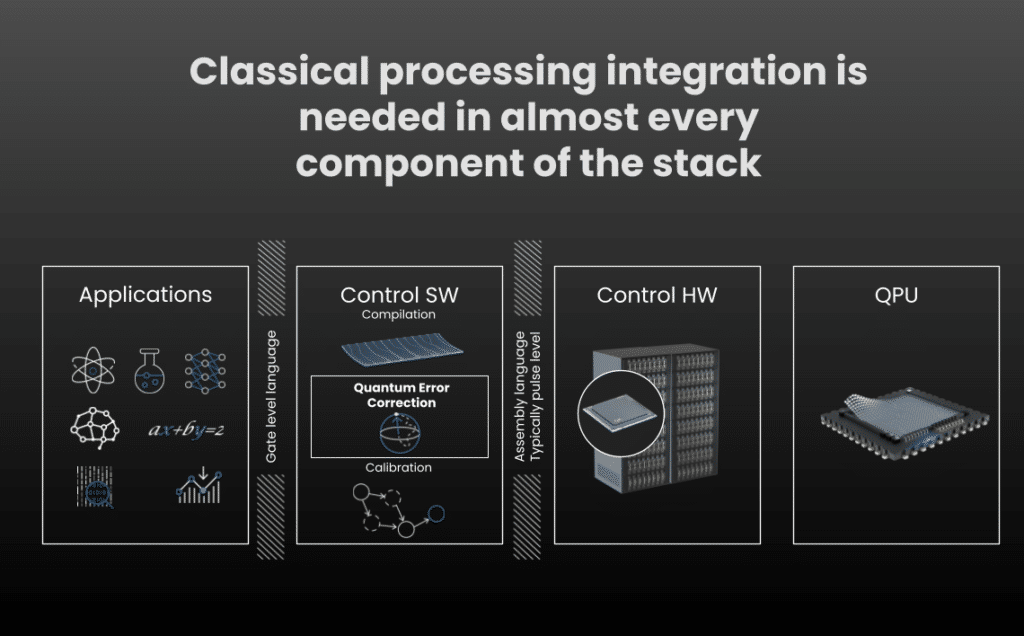

The quantum computing stack includes four major layers (See figure 1):

Figure 1: The Quantum Computing Stack

1) Quantum processors or QPUs: The QPU is where the magic happens. This is where the qubits live, where their entanglement is utilized, and where quantum processing actually runs. It serves as the quantum memory on which gates and operations are applied.

2) Control hardware: This layer drives the QPU and orchestrates the entire quantum computing system. It has several main components:

- Analog front end – the interface between the QPU and the outside world. It generates the analog signals that physically implement quantum gates and measurements.

- Quantum controller – the brain of the control hardware. It sends the right pulses to the right qubits at the right time, in real time and with precise control.

- Pulse-level language – the interface to the quantum controller, which enables us to precisely define what the control hardware does.

3) Control software: It is responsible for the calibrations that run on top of the pulse-level language. It calibrates and optimizes the native gates of the machine.

4) Application layer: This includes higher-level programming languages and development tools. This is where we run algorithms to solve real-world problems.

To run applications, we must put all of these pieces together. Now, you may wonder, where does quantum-classical integration come in, and why does it matter?

The Role of Quantum-Classical Integration

Why is quantum-classical integration so important? Put simply, because nearly every component of the stack requires it (see Figure 2). Let’s look at a few examples.

First, let’s discuss calibrations. We typically scan the parameters of the pulses we send to the QPU and measure the results. We then need classical processing to analyze these results and find the pulse parameters that best implement the desired quantum gates. Here, classical processing is tightly interwoven with quantum processing: we run something on the QPU, analyze the results, update the pulse parameters, and repeat.
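As a rough illustration of this loop, here is a sketch of a two-stage amplitude scan for a π pulse. It is not QM’s calibration code. The QPU is replaced by a hypothetical `run_on_qpu` function that samples a Rabi-style response, sin²(πa / 2a_π), using a made-up true amplitude `A_PI_TRUE`. The "analysis" step is a simple argmax rather than a proper fit.

```python
import math
import random

# Hypothetical "true" pi-pulse amplitude of the simulated qubit (arbitrary units).
A_PI_TRUE = 0.42


def run_on_qpu(amplitude: float, shots: int, rng: random.Random) -> float:
    """Stand-in for the QPU: fraction of shots measured in |1> after one pulse."""
    p1 = math.sin(math.pi * amplitude / (2 * A_PI_TRUE)) ** 2
    ones = sum(1 for _ in range(shots) if rng.random() < p1)
    return ones / shots


def scan(amplitudes, shots: int, rng: random.Random) -> dict:
    """Quantum step: measure the response at every amplitude in the sweep."""
    return {a: run_on_qpu(a, shots, rng) for a in amplitudes}


def calibrate_pi_amplitude(shots: int = 1000, seed: int = 1) -> float:
    rng = random.Random(seed)
    # Coarse sweep, then a classical analysis step picks the best point.
    coarse = scan([0.05 * i for i in range(1, 17)], shots, rng)
    best = max(coarse, key=coarse.get)
    # Update the sweep around the best point and measure again.
    fine = scan([best + 0.005 * k for k in range(-10, 11)], shots, rng)
    return max(fine, key=fine.get)


print(f"estimated pi-pulse amplitude: {calibrate_pi_amplitude():.3f}")
```

Every pass through `scan` stands for a round trip to the QPU. This is why calibration speed depends so heavily on where the classical analysis runs.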

Next, let’s examine quantum error correction (QEC). In QEC, we must perform mid-circuit measurements. This means measuring some of the qubits in the QPU while the quantum circuit is running. Here, fast classical processing is needed to analyze these measurements and infer how to respond to any errors arising during the quantum circuit’s runtime. Since classical processing must be performed while the quantum program runs, tight integration of classical processing is required.
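To illustrate this feedback step, here is a classical model of the 3-qubit bit-flip (repetition) code. Two parity checks form the syndrome, and a lookup table maps each syndrome to the qubit that should be flipped. This is a textbook toy, not the cat-code scheme discussed later, and it tracks only bit flips on classical bits. On hardware, this lookup is the kind of decision that must be made within the measurement feedback window.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Lookup-table decoder for the 3-qubit bit-flip (repetition) code.
# Syndrome bits are the parities Z0Z1 and Z1Z2; the value is the qubit to flip.
SYNDROME_TO_FLIP: Dict[Tuple[int, int], Optional[int]] = {
    (0, 0): None,  # no error detected
    (1, 0): 0,     # only Z0Z1 fired -> qubit 0 flipped
    (1, 1): 1,     # both checks fired -> qubit 1 flipped
    (0, 1): 2,     # only Z1Z2 fired -> qubit 2 flipped
}


def measure_syndrome(bits: Sequence[int]) -> Tuple[int, int]:
    """Classical model of the two mid-circuit parity measurements."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def correct(bits: Sequence[int]) -> List[int]:
    """Decode the syndrome and apply the feedback correction."""
    flip = SYNDROME_TO_FLIP[measure_syndrome(bits)]
    corrected = list(bits)
    if flip is not None:
        corrected[flip] ^= 1
    return corrected


for error_qubit in range(3):
    noisy = [0, 0, 0]
    noisy[error_qubit] ^= 1
    print(noisy, "->", correct(noisy))
```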

Finally, let’s consider applications. Most NISQ applications rely heavily on classical processing to optimize the parameters of the quantum circuit against a cost function. Again, we parameterize the quantum circuit, perform quantum measurements, and then use classical processing to update the circuit parameters, repeating until the cost function is minimized.
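The variational loop described above can be sketched with a one-parameter toy problem. Here the "QPU" is a hypothetical function that returns ⟨Z⟩ = cos θ for the state RY(θ)|0⟩, evaluated exactly rather than estimated from shots. The classical optimizer is plain gradient descent using the parameter-shift rule. Real NISQ workloads have many parameters and noisy estimates, so this only shows the quantum-classical round trip.

```python
import math
from typing import Callable


def expectation_z(theta: float) -> float:
    """Stand-in for the QPU: <Z> of RY(theta)|0> is cos(theta)."""
    return math.cos(theta)


def parameter_shift_grad(f: Callable[[float], float], theta: float) -> float:
    """Gradient from two extra circuit evaluations at theta +/- pi/2."""
    return (f(theta + math.pi / 2) - f(theta - math.pi / 2)) / 2


def minimize(theta0: float = 0.1, lr: float = 0.4, steps: int = 100) -> float:
    theta = theta0
    for _ in range(steps):
        # Quantum step (evaluate shifted circuits), then classical step (update theta).
        theta -= lr * parameter_shift_grad(expectation_z, theta)
    return theta


theta_opt = minimize()
print(f"theta = {theta_opt:.6f}, cost = {expectation_z(theta_opt):.6f}")
```

The starting point must not be exactly θ = 0, because the gradient vanishes there. Each optimizer step needs two circuit evaluations, so latency between the QPU and the optimizer adds up quickly.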

These classical processing use cases are interwoven with the quantum processing tasks in various components along the stack.

Figure 2: Quantum-Classical Integration in the Quantum Computing Stack

The Challenges of Quantum-Classical Integration

The classical processing tasks mentioned above require different amounts of classical computing resources. For example, QEC protocols may need to respond to quantum measurements within a few hundred nanoseconds, inside the quantum protocol itself. Variational algorithms, on the other hand, may need access to heavier classical processing, such as CPUs or GPUs in a data center, in the cloud, or even on a high-performance computing (HPC) system.

The farther we move from the QPU toward heavier classical processing, the more we pay in communication time and overhead. As a result, calibrations often take too long, which increases downtime and reduces performance. Quantum error correction, meanwhile, is tough to implement and still has a long way to go, and slow development cycles only stretch that timeline further. It is therefore essential to build a hierarchy of classical compute engines for maximum performance. We also need better tools for exploring QEC and other applications on a flexible, highly productive software platform.
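One way to picture such a hierarchy is a dispatcher that runs each classical task on the closest engine able to meet both its deadline and its compute needs. The sketch below is illustrative only. The tier names, latencies, and capacities are hypothetical numbers we chose; the only figure drawn from the text is that the fastest tier reflects the few-hundred-nanosecond budget mentioned for QEC.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ComputeTier:
    name: str
    latency_s: float  # hypothetical round-trip latency to the controller
    capacity: float   # hypothetical compute capacity, arbitrary units


# Illustrative numbers only, from closest to farthest from the QPU.
TIERS = (
    ComputeTier("pulse processor", 200e-9, 1.0),
    ComputeTier("co-processor", 10e-6, 10.0),
    ComputeTier("on-prem CPU/GPU server", 1e-3, 100.0),
    ComputeTier("cloud / HPC", 50e-3, 1000.0),
)


def choose_tier(deadline_s: float, required_capacity: float,
                tiers: Sequence[ComputeTier] = TIERS) -> Optional[ComputeTier]:
    """Return the lowest-latency tier that meets both the deadline and capacity need."""
    for tier in sorted(tiers, key=lambda t: t.latency_s):
        if tier.latency_s <= deadline_s and tier.capacity >= required_capacity:
            return tier
    return None  # no tier can serve this task


print(choose_tier(500e-9, 1.0))  # QEC-style feedback
print(choose_tier(1.0, 500.0))   # heavy variational optimizer
```

A task that needs both high compute and very low latency gets `None`. This is exactly the gap that motivates placing more classical capability close to the QPU.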

At Quantum Machines, we focus on getting the best out of quantum processors and enabling fast time-to-results through an advanced quantum control stack. QM’s hardware includes a scalable, modular analog front end and a pulse processing unit (PPU) designed specifically for quantum control. The PPU generates pulses, performs quantum measurements, and integrates general classical and quantum processing in real time. It is programmed in QUA, our pulse-level programming language, which lets you develop and execute both quantum and classical operations with precise timing control.

Our hardware also includes coprocessors, stream processing, networking, storage, and caching features to integrate classical processing resources seamlessly. In the software stack, we have Entropy, a platform that manages the flow of classical and quantum programs and integrates with higher layers and open-source standards such as OpenQASM 3. To put this in context, let’s look at some examples that show why this platform is so helpful.

Advanced Quantum Control in Action

Lawrence Livermore National Lab

An example from Lawrence Livermore National Lab involves the Ramsey measurement, which is used to characterize a qubit and calibrate its frequency. With a traditional control system that cannot perform the classical processing needed to scan parameters quickly, a particular program took 900 minutes to run. With QM’s quantum control platform (which we recently upgraded: say hello to the OPX1000!), the same program ran in just two minutes. This is thanks to classical processing that makes it possible to scan pulse parameters very quickly. The drop was also due to another form of classical processing, which actively resets the qubits back to the |0⟩ state.

This matters not just because the program runs faster, but also because it runs in the control hardware itself. We can calibrate not only much faster (see Figures 3 and 4 below) but also much more often, which maximizes system uptime and keeps the system calibrated at all times.

Figure 3: Reduction in data acquisition time with QOP (the Quantum Orchestration Platform)

Figure 4: Two-dimensional Ramsey map with varying frequency detuning and interpulse delay time, from which T2* can be inferred.
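To make the analysis behind Figure 4 concrete, here is a sketch that builds a two-dimensional Ramsey map and reads off the qubit frequency offset and T2*. It assumes an idealized, noiseless signal 0.5·(1 + e^(−t/T2*)·cos(2πΔt)), with perfect π/2 pulses and a purely exponential envelope. The offset (0.3 MHz) and T2* (2 μs) are made-up values. Real data would call for a damped-cosine fit rather than this shortcut.

```python
import math
from typing import List, Sequence, Tuple


def ramsey_signal(delay_us: float, detuning_mhz: float,
                  qubit_offset_mhz: float, t2_star_us: float) -> float:
    """Idealized, noiseless Ramsey fringe (normalized population)."""
    delta = detuning_mhz - qubit_offset_mhz  # residual detuning in MHz
    envelope = math.exp(-delay_us / t2_star_us)
    return 0.5 * (1 + envelope * math.cos(2 * math.pi * delta * delay_us))


def ramsey_map(delays: Sequence[float], detunings: Sequence[float],
               qubit_offset_mhz: float, t2_star_us: float) -> List[List[float]]:
    """One row per drive detuning, one column per interpulse delay."""
    return [[ramsey_signal(t, d, qubit_offset_mhz, t2_star_us) for t in delays]
            for d in detunings]


def analyze(delays: Sequence[float], detunings: Sequence[float],
            signal: List[List[float]]) -> Tuple[float, float]:
    """Return (resonant detuning in MHz, T2* in us) from the 2D map."""
    # On resonance the fringes stop oscillating, so that row has the largest mean.
    means = [sum(row) / len(row) for row in signal]
    best = max(range(len(detunings)), key=lambda i: means[i])
    # There, signal = 0.5 * (1 + exp(-t / T2*)), so ln(2 * signal - 1) = -t / T2*.
    points = [(t, math.log(2 * s - 1)) for t, s in zip(delays, signal[best])
              if 2 * s - 1 > 0]
    slope = sum(t * y for t, y in points) / sum(t * t for t, _ in points)
    return detunings[best], -1.0 / slope


delays = [0.1 * k for k in range(1, 51)]                    # 0.1 to 5.0 us
detunings = [round(-2.0 + 0.1 * k, 10) for k in range(41)]  # -2 to 2 MHz
signal = ramsey_map(delays, detunings, qubit_offset_mhz=0.3, t2_star_us=2.0)
print(analyze(delays, detunings, signal))
```

The map here has 41 × 50 points. On hardware, each point is itself an average over many shots. That multiplication is why fast parameter scanning and active reset dominate the total run time.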

IBM CLOPS Benchmark

A second example is enhanced QPU utilization, as measured by IBM’s CLOPS (circuit layer operations per second) benchmark. This benchmark measures not only the QPU but also the controller architecture and the performance of the entire system.

When the benchmark was announced, we ran CLOPS on our platform. Although these results are preliminary, we performed the entire CLOPS benchmark on our control hardware, without having to reach out to heavier classical computing resources, and achieved a rate of 10,000 CLOPS. And this is just the beginning.
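For reference, here is IBM’s published definition of CLOPS as we understand it. The metric counts how many quantum-volume circuit layers a system executes per second: CLOPS = M × K × S × D / (elapsed time). Here M = 100 circuit templates, K = 10 parameter updates per template, S = 100 shots, and D = log2(QV) layers. The sketch below encodes that arithmetic. The inputs in the example are hypothetical and do not reflect the configuration of our test.

```python
def clops(quantum_volume: int, elapsed_s: float, templates: int = 100,
          updates: int = 10, shots: int = 100) -> float:
    """CLOPS = M * K * S * D / elapsed time, with D = log2(quantum volume)."""
    if quantum_volume < 2 or quantum_volume & (quantum_volume - 1):
        raise ValueError("quantum volume must be a power of two >= 2")
    layers = quantum_volume.bit_length() - 1  # D = log2(QV), exact for powers of two
    return templates * updates * shots * layers / elapsed_s


# Hypothetical run: QV 32 (D = 5), 1,000 parameterized circuits at 100 shots each, in 50 s.
print(clops(quantum_volume=32, elapsed_s=50.0))
```

The elapsed time includes every parameter update between circuit runs. This is why keeping those updates inside the controller, rather than round-tripping to a remote host, shows up directly in the score.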

Programming Quantum Error Correction Protocols

Finally, let’s discuss quantum error correction. At QM, we have deep roots in quantum error correction. In fact, the first demonstration of quantum error correction to reach the break-even point was performed by none other than our Chief Engineer and co-founder, Dr. Nissim Ofek.

However, running quantum error correction successfully, even for a single logical qubit, is incredibly complicated. For example, shortening the cycle time requires a way to program protocols from a much more flexible software platform without compromising hardware performance.

Figure 6: Magic state distillation with cat codes [C. Chamberland, K. Noh, et al., “Building a fault-tolerant quantum computer using concatenated cat codes,” PRX Quantum 3, 010329 (2022)]

To illustrate this approach, we recently implemented a magic state distillation protocol that AWS proposed in a December 2020 paper on cat codes (see Figure 6). Magic states are essential for performing certain critical gates (the non-Clifford gates) on quantum-error-corrected logical qubits. The protocol is also quite complicated in terms of control flow: it must run in real time, and so must the classical computation it depends on. This involves a lot of classical processing and control flow, repeat-until-success loops, and other complex control logic. For these tasks, we wrote QUA code (Figure 7) that matches AWS’s proposed pseudocode almost line by line. This highlights how well QUA can handle complex quantum workloads that require tight integration of classical processing.
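Repeat-until-success control flow is where real-time classical processing matters most. As a sketch only, here is that loop in plain Python. `attempt` is a hypothetical callable that runs one distillation round and reports whether its checks passed, and the simulated success probability (0.25) is made up for illustration. The sketch shows that the number of rounds is random, averaging 1/p, so the schedule cannot be fixed in advance.

```python
import random
from typing import Callable


def repeat_until_success(attempt: Callable[[], bool], max_attempts: int = 10_000) -> int:
    """Run `attempt` until it reports success; return how many attempts it took."""
    for n in range(1, max_attempts + 1):
        if attempt():
            return n
    raise RuntimeError(f"no success after {max_attempts} attempts")


def make_simulated_attempt(p_success: float, rng: random.Random) -> Callable[[], bool]:
    """Hypothetical stand-in for one distillation round plus its pass/fail check."""
    return lambda: rng.random() < p_success


rng = random.Random(42)
counts = [repeat_until_success(make_simulated_attempt(0.25, rng)) for _ in range(20_000)]
print(f"mean attempts: {sum(counts) / len(counts):.2f} (expected about 1/p = 4)")
```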

Below is the QUA code for magic state preparation, specifically its STOP error-correction routine, which closely resembles AWS’s proposed pseudocode.

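```python
from qm.qua import assign, else_, if_, while_

# assign_vec, compare_synd, measure_ZL_syndrome and correct_code are helper
# macros that are not shown in this post. The QUA variables test, t, n_diff,
# n_diffInc, SynRep, countSyn, synPrevRound, synCurrRound and code_distance
# are declared elsewhere in the program.


def ec_stop(logical_qubit):
    # Keep measuring syndromes until the STOP criterion is met
    with while_(test == False):
        # n_diff has reached t: this becomes the last round
        with if_(n_diff == t):
            assign(test, 1)
        # Save the previous round, then measure a new Z_L syndrome
        assign_vec(synPrevRound, synCurrRound, size=code_distance - 1)
        measure_ZL_syndrome(logical_qubit, synCurrRound)
        with if_(countSyn > 1):
            with if_(compare_synd(synCurrRound, synPrevRound, size=code_distance - 1)):
                # Same syndrome as last round: extend the repeat streak
                assign(SynRep, SynRep + 1)
                assign(n_diffInc, 0)
            with else_():
                # Syndrome changed: reset the streak, count the change once
                assign(SynRep, 0)
                with if_(n_diffInc == 0):
                    assign(n_diff, n_diff + 1)
                    assign(n_diffInc, 1)
                with else_():
                    assign(n_diffInc, 0)
        # Enough identical rounds in a row: stop
        with if_(SynRep == t - n_diff + 1):
            assign(test, 1)
        assign(countSyn, countSyn + 1)
    # Decode and correct using the last measured syndrome
    correct_code(qubit=logical_qubit, syndrome=synCurrRound)
```

Figure 7: QUA code for magic state preparation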

Figure 8: STOP algorithm pseudocode from AWS’s paper. It is very simple to run this code in QUA. [C. Chamberland, K. Noh, et al., “Building a fault-tolerant quantum computer using concatenated cat codes,” PRX Quantum 3, 010329 (2022)]
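To trace the control flow of Figure 7 without a controller, here is a plain-Python transcription of the same STOP logic. It is a sketch under our own assumptions, not QM’s or AWS’s code. We read the flattened QUA listing with the nesting we consider most natural. We assume the syndrome comparison is skipped only in the first round (that is, countSyn starts at 1). Syndromes are tuples of bits, and an iterator of pre-recorded syndromes stands in for measure_ZL_syndrome. The function returns the last syndrome, which would then go to the decoder (correct_code in the QUA version).

```python
from typing import Iterable, Optional, Tuple

Syndrome = Tuple[int, ...]


def ec_stop(syndromes: Iterable[Syndrome], t: int) -> Tuple[Syndrome, int]:
    """Plain-Python transcription of the STOP loop; returns (final syndrome, rounds)."""
    stream = iter(syndromes)    # stands in for measure_ZL_syndrome(...)
    stop = False                # test
    n_diff = 0                  # syndrome changes (back-to-back changes count once)
    n_diff_inc = False          # n_diffInc: previous change was already counted
    syn_rep = 0                 # SynRep: consecutive repeats of the syndrome
    prev: Optional[Syndrome] = None
    curr: Optional[Syndrome] = None
    rounds = 0
    while not stop:
        if n_diff == t:
            stop = True         # loop ends after this final round
        prev, curr = curr, next(stream)
        rounds += 1
        if prev is not None:    # no comparison in the very first round
            if curr == prev:
                syn_rep += 1
                n_diff_inc = False
            else:
                syn_rep = 0
                if not n_diff_inc:
                    n_diff += 1
                    n_diff_inc = True
                else:
                    n_diff_inc = False
        if syn_rep == t - n_diff + 1:
            stop = True
    return curr, rounds


# One early disagreement with t = 1: stops after three rounds on syndrome (0, 0).
print(ec_stop([(0, 1), (0, 0), (0, 0), (0, 0)], t=1))
```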

The Long Road to Practical Quantum Computing

Over the next few years, we have a unique opportunity to learn, explore, and find the most effective ways to run calibrations and error mitigation, and to improve quantum error correction. By doing so, we can make sure our hardware is not only optimal for a specific task but also flexible enough to make quantum computing practical. After all, new ideas are constantly emerging, and they require new capabilities from the underlying control stack.

We need a flexible platform that shortens the development cycle of trying out new ideas, learning from them, and repeating this process many times. At Quantum Machines, we’re pushing toward exactly this future with the Quantum Orchestration Platform, which enables tight integration of quantum and classical processing.

—

[1] C. Chamberland, K. Noh, et al., “Building a fault-tolerant quantum computer using concatenated cat codes,” PRX Quantum 3, 010329 (2022).